Imagine that you are the Vice President of HR at a large services organization. You have just completed a quarterly virtual all-hands meeting with your extended department and followed up with a lengthy e-mail, part of which read:

Thanks, all, for attending today’s quarterly meeting.

As we discussed, we have several new initiatives post-pandemic to get us all back “into the groove” after our extended period of work-from-home. …

For our next meeting, we’ll all be reading We’re All in This Together: Creating a Team Culture of High Performance, Trust, and Belonging by Mike Robbins (a copy is being shipped to you now), and we will discuss it in depth at our next quarterly meeting, which will be June 3, 2023. …

Additionally, we’ll be forming task groups to work on the HCM deliverables for this year – your name appears below in your group with the specific aspect of our agenda to which you’ve been assigned…

Within an hour, you receive a response from Jesse, one of your team members, that includes the following:

Thanks for the follow-up message. I thought the meeting was great, and I look forward to “hitting the ground running” on these new initiatives. I’ve already put a hold-the-date on June 3 to be sure I’m available for the next meeting, and I can’t wait to work with Jim, Jennifer, Julia, and Justin on our task group’s aspects of the year-long HCM deliverables. I’ve also already read Robbins’ book, but I look forward to re-reading it. I particularly liked his advice on fostering an environment of psychological safety, advocating for inclusion and belonging, addressing conflict, and maintaining a healthy balance between high expectations and personal empathy.

You finish reading this note, the only reply you received from anyone on the original distribution, and are duly impressed. Although Jesse is a relatively new addition to the team, he certainly appears to be a go-getter!

Here’s what you don’t know:

- …he never attended the meeting,

- …he never read the Robbins book (as he claimed), and

- …he didn’t even write the reply e-mail you just received – an AI “bot” did.

AI Comes Into Its Own, Quite Suddenly

On January 23, 2023, Microsoft announced the latest phase of its long-term partnership with OpenAI, dedicated to advancing artificial intelligence technologies to achieve, among other things, “supercomputing at scale, new AI-powered experiences, and [being the] exclusive cloud partner” of OpenAI via Microsoft’s Azure platform.

Significantly, the Microsoft announcement went on to explain, “We formed our partnership with OpenAI around a shared ambition to responsibly advance cutting-edge AI research and democratize AI as a new technology platform.” The words “responsibly” and “democratize” do a lot of work in this assertion. The casual reader might wonder what responsibility and democratization have to do with AI, and to paraphrase the Bard, “Methinks the spokesperson might protest too much…” In other words, regarding the more general use of AI, “what could possibly go wrong?”

Within a few days of this announcement, the following headlines appeared across the internet:

- From the healthcare metaverse: “AI Bot ChatGPT Passes US Medical Licensing Exams Without Cramming – Unlike Students” 1

- From the education metaverse: “ChatGPT Passes MBA Exam Given by a Wharton Professor” 2 and “ChatGPT Banned in Some Schools…” 3

- From the compliance metaverse: “AI Rockets Ahead in Vacuum of US Regulation” 4

Additionally, and perhaps not coincidentally, on January 26, 2023, the Department of Commerce’s National Institute of Standards and Technology (NIST) issued version 1.0 of its Artificial Intelligence Risk Management Framework (AI RMF 1.0). 5

At about this same time, the Brookings Institution, a leading nonprofit public policy organization, published an excellent commentary entitled “NIST’s AI Risk Management Framework Plants a Flag in the AI Debate,” 6 authored by Cameron F. Kerry, a Brookings Fellow and former Commerce Department leader.

Noting the recent rapid development of AI capabilities, Kerry explains that the AI RMF provides “two lenses” through which to view these developments: (a.) a conceptual roadmap for identifying risks in the context of AI, and (b.) a set of organizational processes and activities that can be used to manage those risks. While the commentary does not focus specifically on labor supervision practices or on the problems organizations may face when employees use AI in unapproved ways, the framework it describes can help in this situation and many others! In particular, the commentary points out, multinational organizations will need to monitor the development of standards, rules, and restrictions across various regions, with the European Union likely leading the pack, as it typically does, and building on the restrictions on AI use already in effect under the General Data Protection Regulation (GDPR). 7

The Promises of AI, Fulfilled

Before focusing on the burgeoning concerns about AI use, it is essential to highlight the many areas where AI is already improving human capital management (HCM) processes and delivering on long-standing promises for HR.

Recruiting: AI-enhanced candidate screening has been an essential feature of recruiting systems for well over a decade. As job boards, recruiting sites, and social media tools have made it ever easier for candidates to apply for jobs (now with “one click,” and regardless of their qualifications), recruiters have found it increasingly necessary to perform mass screening of those applicants. Supporting good “CRM” (candidate relationship management) practices, AI can now be deployed to ask qualifying questions, apply deductive logic to move the candidate through those questions, and, of course, terminate the application when the candidate does not meet minimum requirements.
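The knockout flow described above can be sketched in a few lines of Python. This is a minimal illustration, not any vendor’s screening engine: the `Question` structure, the `screen` function, and the three sample questions are all hypothetical, and the flow is a fixed sequence, whereas production systems may branch on earlier answers.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple


@dataclass
class Question:
    prompt: str
    passes: Callable[[str], bool]  # knockout rule: False means the minimum is not met
    next_key: Optional[str]        # next question to ask; None ends the flow


# Hypothetical qualifying questions for an illustrative payroll job posting
QUESTIONS: Dict[str, Question] = {
    "authorized": Question(
        "Are you legally authorized to work in the US? (yes/no)",
        lambda a: a.strip().lower() == "yes",
        "experience",
    ),
    "experience": Question(
        "How many years of payroll experience do you have?",
        lambda a: a.strip().isdigit() and int(a) >= 3,
        "shift",
    ),
    "shift": Question(
        "Are you available to work evening shifts? (yes/no)",
        lambda a: a.strip().lower() == "yes",
        None,
    ),
}


def screen(answers: Dict[str, str], start: str = "authorized") -> Tuple[bool, List[str]]:
    """Walk the question chain, stopping at the first failed minimum requirement.

    Returns (qualified, keys_of_questions_asked).
    """
    asked: List[str] = []
    key: Optional[str] = start
    while key is not None:
        question = QUESTIONS[key]
        asked.append(key)
        if not question.passes(answers.get(key, "")):
            return False, asked  # knockout: terminate the application here
        key = question.next_key
    return True, asked


print(screen({"authorized": "yes", "experience": "1"}))  # (False, ['authorized', 'experience'])
```

Keeping each rule as a small function makes it easy for recruiters and HR compliance staff to review exactly which answer ends an application, which matters for the fairness concerns raised by frameworks like the AI RMF.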

HR Service Center: Automated HR service delivery rests on a foundational principle of service center software: employee inquiries and service requests must be stratified by complexity and routed accordingly. The more of these inquiries that can be “pushed down to” and thoroughly addressed by employee self-service, the lower the per-inquiry cost to the employer.

Sometimes referred to as “Tier 0 Support” (so named because the time a human support representative needs to resolve the inquiry is zero), this level of service originated simply as online documentation that employees read through on their own in the hope of finding their answer. Unfortunately, because employees vary widely in their ability to find answers independently, Tier 0 support features in HR service center applications initially floundered.

Adding AI to HR service center applications has been a true game changer.

Essentially leveraging the same technology and logic that is ubiquitous in online shopping sites, AI within the HR service center application uses semantic search and content-based filtering to resolve an employee’s issue. Incorporating the latest AI innovations, chatbots can turn this interaction from a stilted keyword exchange to a more human-like “conversation.”
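Content-based retrieval of this kind can be illustrated with a small TF-IDF and cosine-similarity sketch in Python. It is only a stand-in: commercial HR service center products typically rely on learned language embeddings for true semantic search, whereas this example matches on weighted keywords. The article titles and the `build_index` and `best_match` helpers are hypothetical.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple

# Hypothetical knowledge-base articles in an HR service center
ARTICLES: Dict[str, str] = {
    "pto-balance": "How to check your paid time off (PTO) balance and vacation accrual",
    "direct-deposit": "Change the bank account used for your payroll direct deposit",
    "w2-copy": "Download a copy of your W-2 tax form",
    "benefits-enroll": "Enroll in health benefits during open enrollment",
}

Vector = Dict[str, float]


def tokenize(text: str) -> List[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def tfidf(tokens: List[str], idf: Dict[str, float]) -> Vector:
    # Term frequency times inverse document frequency; terms absent from the index weigh 0
    counts = Counter(tokens)
    return {t: c * idf.get(t, 0.0) for t, c in counts.items()}


def build_index(docs: Dict[str, str]) -> Tuple[Dict[str, float], Dict[str, Vector]]:
    tokenized = {key: tokenize(text) for key, text in docs.items()}
    n = len(docs)
    doc_freq = Counter(t for toks in tokenized.values() for t in set(toks))
    # Smoothed IDF: terms that appear in fewer articles get higher weight
    idf = {t: math.log((1 + n) / (1 + df)) + 1.0 for t, df in doc_freq.items()}
    return idf, {key: tfidf(toks, idf) for key, toks in tokenized.items()}


def cosine(a: Vector, b: Vector) -> float:
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def best_match(query: str, idf: Dict[str, float], vectors: Dict[str, Vector]) -> Tuple[float, str]:
    """Return (similarity, article_key) for the article closest to the query."""
    q = tfidf(tokenize(query), idf)
    return max((cosine(q, v), key) for key, v in vectors.items())


idf, vectors = build_index(ARTICLES)
print(best_match("Where can I get my W-2 form?", idf, vectors)[1])  # w2-copy
```

A chatbot layer would sit on top of a retrieval step like this, phrasing the matched article as a conversational answer instead of returning a raw link.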

Simply put, the smarter the AI engine can make the HR service center software, the more employee support transactions can be successfully resolved at “Tier 0,” resulting in lower organizational costs – and a happier workforce (due to reduced resolution times and the increased satisfaction of having resolved a question instantly).
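The cost argument is simple weighted-average arithmetic. The sketch below assumes hypothetical per-inquiry costs for each tier (illustrative placeholders, not industry benchmarks) and shows how shifting volume toward Tier 0 lowers the blended cost per inquiry.

```python
from typing import Dict

# Hypothetical fully loaded cost per resolved inquiry, by support tier (illustrative only)
COST_PER_INQUIRY: Dict[int, float] = {0: 2.00, 1: 15.00, 2: 40.00}


def blended_cost(tier_shares: Dict[int, float]) -> float:
    """Weighted-average cost per inquiry, given the share of volume resolved at each tier."""
    if abs(sum(tier_shares.values()) - 1.0) > 1e-9:
        raise ValueError("tier shares must sum to 1.0")
    return sum(share * COST_PER_INQUIRY[tier] for tier, share in tier_shares.items())


before = blended_cost({0: 0.20, 1: 0.60, 2: 0.20})  # little Tier 0 self-service
after = blended_cost({0: 0.50, 1: 0.40, 2: 0.10})   # AI-assisted Tier 0 resolves half
print(f"${before:.2f} -> ${after:.2f} per inquiry")
```

With these assumed figures, moving from a 20/60/20 split to a 50/40/10 split across Tiers 0, 1, and 2 lowers the blended cost from $17.40 to $11.00 per inquiry.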

Workforce Analytics: When first introduced to the HCM marketplace in the early 2000s, workforce analytics were largely retrospective. Their most significant innovation was in the presentation layer, with charts and graphs replacing simple listings and tabular reports. With the recent incorporation of artificial intelligence into these analytics, HR professionals can finally move beyond a “rearview mirror” reporting focus to root cause analysis and basic predictive analytics.
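The difference between “rearview mirror” reporting and basic prediction can be seen in a toy example: a retrospective report summarizes what happened, while even the simplest forecast fits a trend and projects it forward. The sketch below uses ordinary least squares on hypothetical monthly resignation counts; it is not how any particular AI-enabled analytics product works, and real platforms use far richer models and many more variables.

```python
from typing import List, Tuple


def linear_trend(values: List[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit of y = intercept + slope * t, with t = 0, 1, 2, ..."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations")
    t_mean = (n - 1) / 2
    y_mean = sum(values) / n
    s_tt = sum((t - t_mean) ** 2 for t in range(n))
    s_ty = sum((t - t_mean) * (y - y_mean) for t, y in enumerate(values))
    slope = s_ty / s_tt
    return slope, y_mean - slope * t_mean


def forecast_next(values: List[float]) -> float:
    slope, intercept = linear_trend(values)
    return intercept + slope * len(values)  # project one period past the last observation


# Hypothetical monthly resignation counts for one business unit
resignations = [10, 12, 14, 16]
rearview_average = sum(resignations) / len(resignations)  # what a retrospective report shows
print(rearview_average, forecast_next(resignations))
```

For the assumed series 10, 12, 14, 16, the rearview average is 13, while the fitted trend (a slope of 2 per month) projects 18 resignations next month.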

The Threats of AI, Misused

Threats related to employees’ misuse of artificial intelligence technology must be interpreted in the context of the current employment situation, which, in 2023, is going through substantial change. Coming out of the pandemic, very high rates of remote work are decreasing toward, but usually not yet reaching, pre-pandemic norms. Indeed, the combination of virtually full employment (a 50-year-low unemployment rate of 3.4% and more than two open positions for every unemployed individual) and a new zeal for the work-from-home convenience that workers experienced at the height of the pandemic has fundamentally changed the work-location dynamic for large swaths of the US labor market. Employees want to work from home, sometimes prioritizing it over pay raises. However, their employers don’t necessarily agree.

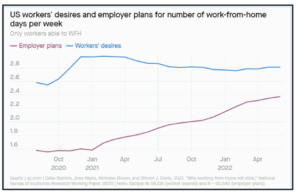

The World Economic Forum has been tracking workers’ desired number of work-from-home days per week against employers’ plans, 8 and the data for the two-year period from mid-2020 through mid-2022 show a narrowing but still measurable gap. (See Figure 1.)

Figure 1.

As the gap between employee and employer preferences narrows and agreement is reached, employees in work-from-home-eligible jobs will likely be permitted an average of about 2.5 work-from-home days per week, roughly 50% of a five-day workweek. This would be a significant increase over the years before the pandemic, when only 6% of employees worked remotely on a primary basis.9

With such a dramatic increase in the share of time that remote or hybrid employees can work from home comes the inevitable potential for abuse. Most employees in jobs eligible for remote or hybrid work are likely to be salaried and/or exempt and personally trusted to put in the work during regular working hours, but there will always be exceptions: employees who are less engaged and require more active monitoring.

Since well before the pandemic, tools have existed to help ensure that employees are where they say they are when clocking in or signing in for work. These include IP address monitoring, in which the approximate location of an employee’s computer is inferred from the internet protocol (IP) address of its connection. Similarly, geo-fencing can use GPS technology to restrict the places from which an employee can clock into time management systems and/or the employer’s production servers. Of course, tech-savvy employees can defeat some of these technologies using IP spoofing or other evasion techniques, but most employees will not have the technical skills to do so.
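The two checks described above can be sketched with Python’s standard library. Two simplifications are assumed: the IP check tests membership in a hypothetical allowlist of corporate address ranges (true IP geolocation requires a commercial lookup database), and the geo-fence is a circle of an assumed radius around a hypothetical office coordinate, measured with the haversine great-circle formula.

```python
import ipaddress
import math
from typing import Tuple

# Hypothetical corporate address ranges (documentation-only prefixes from RFC 5737)
CORPORATE_NETWORKS = [
    ipaddress.ip_network("203.0.113.0/24"),
    ipaddress.ip_network("198.51.100.0/24"),
]

# Hypothetical geo-fence: office coordinates (latitude, longitude) and allowed radius
OFFICE: Tuple[float, float] = (40.7128, -74.0060)
RADIUS_M = 200.0
EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in meters


def on_corporate_network(ip: str) -> bool:
    """True if the connecting IP address falls inside an allowlisted corporate range."""
    addr = ipaddress.ip_address(ip)
    return any(addr in net for net in CORPORATE_NETWORKS)


def haversine_m(a: Tuple[float, float], b: Tuple[float, float]) -> float:
    """Great-circle distance in meters between two (lat, lon) points given in degrees."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(h))


def inside_geofence(point: Tuple[float, float]) -> bool:
    """True if a GPS reading is within RADIUS_M of the office."""
    return haversine_m(point, OFFICE) <= RADIUS_M


print(on_corporate_network("203.0.113.45"), inside_geofence((40.7130, -74.0058)))
```

As the paragraph notes, both checks trust data supplied by the employee’s device, which is why a VPN or spoofed location can defeat them.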

OpenAI Premieres ChatGPT, and the Threat Increases

The US-based research lab OpenAI, founded in 2015 by a group of entrepreneurs and researchers including Elon Musk (who has since exited the organization), has arguably been one of the most influential developers of artificial intelligence technology for business applications. Around the turn of this year, OpenAI, as the saying goes, “blew up the internet” with ChatGPT, a chatbot released to the public in late November 2022 and built on its GPT-3 family of language models (specifically, the refined GPT-3.5 series).

What is GPT-3? According to sciencefocus.com,10 “GPT-3 (Generative Pretrained Transformer 3) is a state-of-the-art language processing AI model developed by OpenAI. It can generate human-like text and has a wide range of applications, including language translation, language modeling, and generating text for applications such as chatbots. In addition, it is one of the largest and most powerful language processing AI models to date, with 175 billion parameters.” And if that description sounds like bragging, consider this: sciencefocus.com obtained that description from GPT-3 itself when its writers asked the chatbot to describe its own capabilities!

OpenAI’s technology is rippling through every aspect of society right now, with (as noted previously) test runs showing that it can pass, and sometimes excel at, medical licensing and MBA exams. Microsoft has embedded the technology in its Bing search engine. Many school districts have banned students from using it. (Even this article’s author, while writing this piece, had a sometimes-helpful, sometimes-not “co-author” in the form of Microsoft Word’s “Text Predictions: On” setting, with the program constantly suggesting completions to his thoughts!)

Employees could use the same AI technology to produce work products ranging from e-mails to full reports. But could the future for unsuspecting employers include the occasional employee who sets their AI technology engine(s) on and then heads to the beach for the day?

What’s An Organization To Do?

The solutions that employers will ultimately deploy to address the challenge of employees using AI in unapproved ways fall under the broad categorical heading of “employee monitoring software.” While it is fair to say that this category is in its infancy, it is also growing fast.

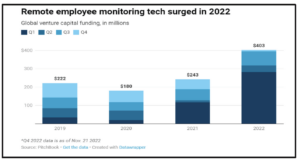

How fast? According to TechBrew 11 (see Figure 2), venture capitalists invested $180.5 million in the space in 2020; by November 2022, year-to-date investment had already reached $403 million, more than double the 2020 total. Clearly, the VCs see the same confluence: a fast-growing remote/hybrid workforce, the need to oversee that workforce, and the potential for AI to contribute to lower productivity among individual employees.

Figure 2.

There are specific steps that cautious employers can take with technology readily available today to try to head off these new AI challenges:

- Mandating the exclusive use of corporate-owned technology may not always be practical (e.g., where the company does not provide mobile devices and employees must use their personal devices to stay connected while traveling on business), but it is the foundation for protecting against AI misuse,

- Mandating VPN connections may also help, but many employers find that performance issues can plague VPN connections during large meetings on platforms like Teams or Zoom, and

- An essential step on corporate-owned laptops and desktop PCs is to deploy software inventory and installation-restriction tools: programs that regularly inventory all software on a machine, report any unrecognized or unapproved software to corporate IT, and/or restrict the kinds of software that can be installed.
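The inventory-and-report step in the last item can be sketched as a comparison of a machine’s installed software against an IT-approved allowlist. Collecting the inventory itself is platform-specific and is handled by endpoint-management tools, so it is omitted here; the `InstalledApp` record, the allowlist entries, the hostname, and the report fields are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List, Set


@dataclass(frozen=True)
class InstalledApp:
    name: str
    version: str


# Hypothetical allowlist maintained by corporate IT
APPROVED: Set[str] = {"Microsoft Word", "Microsoft Teams", "Zoom", "Adobe Acrobat Reader"}


def normalize(name: str) -> str:
    # Compare application names case- and whitespace-insensitively
    return " ".join(name.lower().split())


def find_unapproved(inventory: Iterable[InstalledApp], approved: Set[str] = APPROVED) -> List[str]:
    allowed = {normalize(a) for a in approved}
    return sorted({app.name for app in inventory if normalize(app.name) not in allowed})


def build_report(hostname: str, inventory: Iterable[InstalledApp]) -> Dict[str, object]:
    """Summary that an inventory agent might send to corporate IT."""
    unapproved = find_unapproved(inventory)
    return {"host": hostname, "unapproved": unapproved, "compliant": not unapproved}


inventory = [
    InstalledApp("Microsoft Word", "16.0"),
    InstalledApp("zoom", "5.13"),
    InstalledApp("AutoReply Bot", "1.2"),  # hypothetical unapproved tool
]
print(build_report("LAPTOP-0427", inventory))
```

An allowlist (rather than a blocklist) is the more conservative design choice here, since new AI tools appear faster than IT can enumerate them; note, though, that it cannot see browser-based services that require no installation.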

Conclusion

AI as a technology is entering a new phase of deployment that can present significant challenges to HR departments. Given trends like low unemployment and plentiful job openings, a strong desire among many employees to work from home, and employee engagement challenges so severe that they sometimes culminate in “quiet quitting,” AI chatbot technology will tempt some employees to coast while appearing to be “more present” than they are. In the face of this emerging technology trend, employers will need to decide the extent to which they will monitor their workforce and which tools they will use to keep employees engaged.

==============================================

Editor’s Note:

The speed at which AI development advances in this country and worldwide is astounding. Indeed, within just the six to eight weeks since this article was written, this story has continued to develop in several ways:

- OpenAI has announced GPT-4, now in “initial availability,” succeeding the GPT-3.5 models that powered ChatGPT only months before. OpenAI’s announcement proudly asserts that GPT-4 scored around the 90th percentile of test takers on the Uniform Bar Exam (vs. around the 10th percentile for GPT-3.5) and at the 99th percentile on the Biology Olympiad (vs. the 31st percentile for GPT-3.5).

- This accelerating pace of development leads many AI scientists to warn that we are approaching the “singularity” faster than ever. The singularity is generally described as the hypothetical future point at which artificial intelligence surpasses human intelligence. (Now might be a good time to re-watch Stanley Kubrick’s 1968 film “2001: A Space Odyssey,” paying particular attention to the role of “HAL.” But, of course, it was just science fiction, or so we thought then…)

- Given all that has happened over the past few months, members of Congress are suddenly paying more attention than ever to the impact AI may have on our society. Earlier this year, Congressman Ted Lieu (D-CA) introduced a non-binding resolution in the House of Representatives calling on Congress to examine this impact. This resolution was written entirely by … wait for it … artificial intelligence.

Endnotes

1 AI Bot ChatGPT Passes US Medical Licensing Exams (medscape.com), https://bit.ly/40EC5IO

2 ChatGPT Passes MBA Exam Given by a Wharton Professor, https://bit.ly/3n6nKqQ

3 ChatGPT Banned in Some Schools…, https://bit.ly/448djUf

4 AI rockets ahead in the vacuum of U.S. regulation (axios.com), https://bit.ly/423WbNP

5 Artificial Intelligence Risk Management Framework (AI RMF 1.0), https://bit.ly/3AvC2nT

6 NIST’s AI Risk Management Framework Plants a Flag in the AI Debate (brookings.edu), https://bit.ly/3n2zmLB

7 Artificial Intelligence In Recruitment: Data Protection, https://bit.ly/3Nxkwrp

8 The World Economic Forum, https://bit.ly/3VfKwJt

9 Remote Work Before, During, and After the Pandemic, https://bit.ly/3HfIqDQ

10 Sciencefocus.com, https://bit.ly/443plOG

11 TechBrew, https://bit.ly/441VPci