Introduction

Every organization has a structure in which managers lead groups of employees. The manager is expected to give feedback to each team member and to decide on their bonus and their annual pay increase or decrease. Few companies have “360-degree” feedback mechanisms, but almost all have one-way feedback from managers to team members. Feedback is expected to affect both the bonus (paid quarterly or annually, depending on the role and company) and the yearly pay increase. Feedback matters to employees because it affects the money they take home, but, more importantly, it also shapes their professional life and personal brand. Getting accurate feedback is therefore crucial. Above all, performance-based input delivered as early as possible helps employees correct their mistakes and fine-tune their performance and self-image in the professional environment.

Challenges with Current Feedback Mechanisms

Giving accurate, unbiased feedback is very challenging for any human being. Knowing how important feedback is, companies train their managers on how to give it, what to discuss and what to leave out of the conversation, and how to avoid bias. The other big issue is delay: how long it takes for feedback to reach the employee. Although some companies recommend that managers give active, instant feedback, this is hard in practice because managers are busy with their own daily professional and personal responsibilities. A process is therefore needed to help them give feedback on an ongoing basis, which is nearly impossible to do unaided. This article addresses that ambitious challenge: given the popularity of language models and AI, can the feedback mechanism be automated so that people receive the right feedback and improve their brand value, while saving everyone time?

Large Language Models

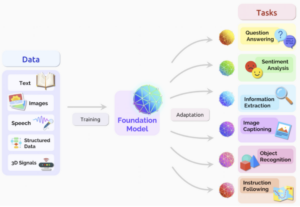

Large language models (LLMs) are foundation models built with deep learning, an advanced form of machine learning. They are pre-trained on vast amounts of data so that they learn the complexity of language and the relationships within it. Using techniques such as the following, these models can then be adapted to downstream (specific) tasks (see Figure 1):

- Fine-tuning

- In-context learning

- Zero-/one-/few-shot learning

Figure 1. Foundation model (source: arXiv)
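To make the zero-/one-/few-shot idea concrete for the feedback use case, here is a minimal Python sketch that assembles a prompt from worked examples. The example pairs, the `build_few_shot_prompt` helper, and the prompt wording are hypothetical illustrations, and no model is called. With `k=0` the function produces a zero-shot prompt, with `k=1` a one-shot prompt, and with larger `k` a few-shot prompt.

```python
from typing import List, Tuple

# Hypothetical worked examples: (activity summary, desired feedback).
EXAMPLES: List[Tuple[str, str]] = [
    ("Attended 4 meetings, spoke in 3; closed 2 tickets.",
     "Went well: active participation. To improve: leave time for focused work."),
    ("Attended 1 meeting; 6 hours of focused work; 0 tickets closed.",
     "Went well: long focus blocks. To improve: share progress with the team."),
]


def build_few_shot_prompt(task: str, examples: List[Tuple[str, str]],
                          query: str, k: int) -> str:
    """Build a prompt containing the first k examples.

    k = 0 gives a zero-shot prompt, k = 1 a one-shot prompt, and
    k > 1 a few-shot prompt. The model's weights are not changed;
    the examples only appear in the input (in-context learning).
    """
    if k < 0 or k > len(examples):
        raise ValueError("k must be between 0 and len(examples)")
    parts = [task]
    for summary, feedback in examples[:k]:
        parts.append(f"Activity: {summary}\nFeedback: {feedback}")
    # The final entry leaves "Feedback:" open for the model to complete.
    parts.append(f"Activity: {query}\nFeedback:")
    return "\n\n".join(parts)


if __name__ == "__main__":
    prompt = build_few_shot_prompt(
        "Write short, constructive workplace feedback.",
        EXAMPLES,
        "Attended 3 meetings; reviewed 5 pull requests.",
        k=2,
    )
    print(prompt)
```

Fine-tuning, by contrast, would update the model's weights on many such pairs instead of placing a few of them in the prompt.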

In simple terms, large language models are complex machine learning models trained on vast amounts of data to answer a wide range of questions. ChatGPT and Google's Bard are well-known examples built on large language models. Although developing a good model for the use case is essential, relevant data plays a critical role in its performance.

What Kind of Data We Have

Modern tools can collect a great deal of sophisticated data. Examples include when employees enter and leave the office, how many times they leave the building, how fast they walk in the corridor, how many hours they spend inside the office, how many meetings they attend, and how actively they participate in discussions. These are only basic examples; many more can be added depending on the company's infrastructure.

Since many roles have become remote since COVID-19, the data collection method can also be tailored for remote employees. For example, active screen time, number of characters typed, typing speed, distraction (switching between screens without spending time on a particular task), the tone of email responses, and the emotions expressed in conversations can all be quantified and gathered.
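One way to hold the signals listed above is a per-employee, per-day record. The Python sketch below is a hypothetical schema: the `DailyActivity` name, its field names, and the subset of signals chosen are assumptions for illustration. The `to_summary` method renders a record as one line of text so it can later be placed in an LLM prompt.

```python
from dataclasses import dataclass, asdict
from datetime import date


@dataclass(frozen=True)
class DailyActivity:
    """Hypothetical per-employee, per-day record of the signals described above."""
    employee_id: str
    day: date
    hours_in_office: float        # on-site: badge-in to badge-out time
    meetings_attended: int
    active_screen_minutes: int    # remote: time with keyboard/mouse activity
    characters_typed: int
    context_switches: int         # proxy for "distraction": window/app switches

    def to_summary(self) -> str:
        """Render the record as one line of text for an LLM prompt."""
        return (
            f"{self.day.isoformat()}: {self.hours_in_office:.1f} h in office, "
            f"{self.meetings_attended} meetings, "
            f"{self.active_screen_minutes} min active screen time, "
            f"{self.characters_typed} characters typed, "
            f"{self.context_switches} context switches"
        )


if __name__ == "__main__":
    record = DailyActivity("emp-042", date(2023, 5, 2), 7.5, 4, 310, 12000, 58)
    print(record.to_summary())
    print(asdict(record))
```

Text-derived signals such as email tone are left out of this sketch because they would first need a separate classification step to become numbers.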

A significant additional issue in data collection and storage is how the data is correlated and attributed to a particular person. Does this type of data collection jeopardize user privacy at a time when no one wants to give up their privacy?

User Privacy

Recently, there has been much research on data privacy and security. Using best-in-class storage methods, we can assure employees that the risk of a data leak is kept to a minimum. Beyond that, the data can be anonymized so that individual users cannot be identified. It can also be aggregated for shared feedback (i.e., team-level feedback), so that nothing is attached to a single user. Finally, the company needs to publish strict rules stating that the data may be used only for feedback and employee improvement in the workplace.
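The anonymization and aggregation steps can be sketched in Python. This sketch assumes a secret key held outside the analytics store: it replaces each employee ID with a keyed HMAC-SHA256 digest and then computes team-level averages. Note that keyed hashing is pseudonymization rather than full anonymization, since whoever holds the key can re-link records. The `min_group_size` threshold is a hypothetical safeguard that drops teams too small to hide an individual.

```python
import hashlib
import hmac
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, List, Set, Tuple


def pseudonymize(employee_id: str, secret_key: bytes) -> str:
    """Replace an employee ID with a keyed hash (HMAC-SHA256).

    The same ID always maps to the same token, so records can still be
    joined, but the ID cannot be recovered without the secret key.
    """
    return hmac.new(secret_key, employee_id.encode("utf-8"), hashlib.sha256).hexdigest()


def team_averages(
    rows: Iterable[Tuple[str, str, float]],
    min_group_size: int = 5,
) -> Dict[str, float]:
    """Average a metric per team from (team, employee_token, value) rows.

    Teams with fewer than min_group_size distinct members are dropped,
    because a small group's average can reveal an individual's value.
    """
    values: Dict[str, List[float]] = defaultdict(list)
    members: Dict[str, Set[str]] = defaultdict(set)
    for team, token, value in rows:
        values[team].append(value)
        members[team].add(token)
    return {
        team: mean(vals)
        for team, vals in values.items()
        if len(members[team]) >= min_group_size
    }


if __name__ == "__main__":
    key = b"replace-with-a-managed-secret"  # hypothetical; load from a secrets manager
    raw = [("platform", f"emp-{i}", float(i)) for i in range(6)] + [("design", "emp-99", 3.0)]
    rows = [(team, pseudonymize(emp, key), v) for team, emp, v in raw]
    print(team_averages(rows))  # "design" is suppressed: it has only one member
```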

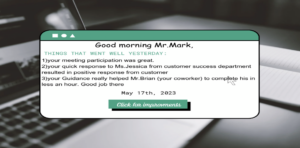

Daily Feedback

Given that we have plenty of data, and that large language models (LLMs, such as ChatGPT) can be deployed at minimal cost, we can use LLMs to give timely daily feedback. The data is collected each day and fed into the model; the next day, when an employee starts work, the LLM's feedback is displayed on their screen. This feedback can be as simple as the top 3 things that went well and a list of things to improve. It helps employees understand their impact on the company and visualize their future potential. The feedback can also be shared with managers (Figure 2) so they know its content and context and can set the stage for success.

Figure 2. Daily Feedback
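The daily loop described above can be sketched in Python: turn yesterday's activity summaries into a prompt, send it to an LLM, and parse the reply into "went well" and "to improve" lists. The `call_llm` argument is a hypothetical placeholder for whichever model client a company uses (no specific vendor API is assumed), and the reply format requested in the prompt is an assumption that the parser relies on.

```python
from typing import Callable, Dict, List, Optional

PROMPT_TEMPLATE = """You are a supportive workplace coach.
Based on yesterday's activity below, reply in exactly this format:
WENT WELL:
- item
- item
- item
TO IMPROVE:
- item
(one or more items)

Activity:
{activity}"""


def build_daily_prompt(activity_lines: List[str]) -> str:
    """Insert yesterday's activity summaries into the prompt template."""
    return PROMPT_TEMPLATE.format(activity="\n".join(activity_lines))


def parse_feedback(reply: str) -> Dict[str, List[str]]:
    """Split a reply in the requested format into its two lists.

    Keeps at most three "went well" items, matching the top-3 idea.
    """
    sections: Dict[str, List[str]] = {"went_well": [], "to_improve": []}
    current: Optional[str] = None
    for raw in reply.splitlines():
        line = raw.strip()
        if line.upper().startswith("WENT WELL"):
            current = "went_well"
        elif line.upper().startswith("TO IMPROVE"):
            current = "to_improve"
        elif line.startswith("- ") and current is not None:
            sections[current].append(line[2:].strip())
    sections["went_well"] = sections["went_well"][:3]
    return sections


def daily_feedback(activity_lines: List[str],
                   call_llm: Callable[[str], str]) -> Dict[str, List[str]]:
    """Build the prompt, call the model, and parse its reply."""
    return parse_feedback(call_llm(build_daily_prompt(activity_lines)))


if __name__ == "__main__":
    # A stand-in for a real LLM client, so the sketch runs offline.
    def fake_llm(prompt: str) -> str:
        return ("WENT WELL:\n- Active in meetings\n- Steady focus time\n"
                "- Closed tickets\nTO IMPROVE:\n- Fewer context switches\n")

    print(daily_feedback(["2023-05-02: 4 meetings, 58 context switches"], fake_llm))
```

Passing the model call in as a function keeps the sketch vendor-neutral and lets the same parsed result be shown to both the employee and the manager.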

Loop Feedback and Improvement into the Annual Review

During annual reviews, managers can review how the employee corrected mistakes and built on positive feedback. The advantage of this method is that employees already know what they will hear in the annual review. The chance of managers introducing bias into the review process is also much lower. Managers can also celebrate quick wins and improvements in the team, which can ultimately boost morale.
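For the annual review, the stored daily results can be rolled up to show which improvement areas kept recurring and which stopped appearing. This Python sketch assumes each day's "to improve" items have already been mapped to short topic labels (a hypothetical step). It treats a topic as addressed if it has not been flagged within `quiet_days` days of the review, a threshold chosen purely for illustration.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Dict, Iterable, List, Tuple


@dataclass
class TopicHistory:
    first_seen: date
    last_seen: date
    times_flagged: int


def summarize_year(
    daily_topics: Iterable[Tuple[date, List[str]]],
    review_date: date,
    quiet_days: int = 30,
) -> Dict[str, dict]:
    """Summarize each improvement topic for the annual review.

    daily_topics holds (day, topics flagged "to improve" on that day).
    A topic counts as addressed if it was last flagged more than
    quiet_days days before review_date.
    """
    history: Dict[str, TopicHistory] = {}
    for day, topics in daily_topics:
        for topic in set(topics):  # count each topic at most once per day
            h = history.get(topic)
            if h is None:
                history[topic] = TopicHistory(day, day, 1)
            else:
                h.first_seen = min(h.first_seen, day)
                h.last_seen = max(h.last_seen, day)
                h.times_flagged += 1
    cutoff = review_date - timedelta(days=quiet_days)
    return {
        topic: {
            "first_seen": h.first_seen,
            "last_seen": h.last_seen,
            "times_flagged": h.times_flagged,
            "addressed": h.last_seen < cutoff,
        }
        for topic, h in history.items()
    }


if __name__ == "__main__":
    log = [
        (date(2023, 1, 9), ["context switching", "meeting prep"]),
        (date(2023, 2, 6), ["context switching"]),
        (date(2023, 11, 20), ["meeting prep"]),
    ]
    for topic, info in summarize_year(log, review_date=date(2023, 12, 15)).items():
        print(topic, info)
```

A topic that stops being flagged suggests, but does not prove, that the underlying behavior changed, so the summary is a starting point for the review conversation rather than a verdict.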

Conclusion

With regular feedback, we address one of the significant challenges in employee retention. Timely feedback has a positive impact on both the employee and the workplace. It also helps retain top talent and reduce bias in feedback. For managers, this feedback tool saves a lot of time and resources while improving the quality of individual feedback sessions. Finally, such tools increase feedback frequency without compromising privacy, so all parties benefit.

References

1 Foundation Models: Definition, Applications & Challenges in 2023 (aimultiple.com)

2 What is Deep Learning? Use Cases, Examples, Benefits in 2023 (aimultiple.com)

3 What is Few-Shot Learning? Methods & Applications in 2023 (aimultiple.com)

5 How to Conduct a Great Performance Review (hbr.org)

7 Large Language Models: Complete Guide in 2023 (aimultiple.com)

8 Google AI Blog (googleblog.com)